Databricks

PROD

As per the documentation here, note that we only support metadata tag extraction for Databricks version 13.3 and higher.

In this section, we provide guides and references to use the Databricks connector.

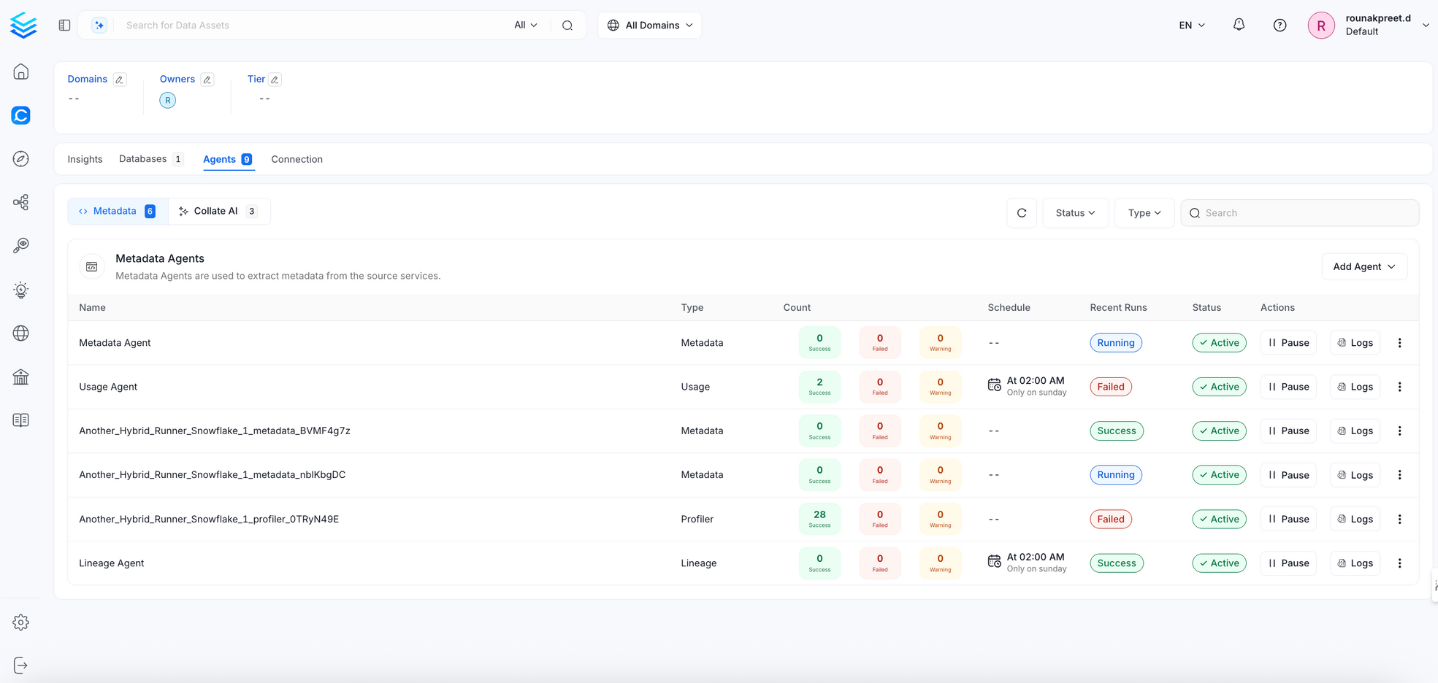

Configure and schedule Databricks metadata and profiler workflows from the OpenMetadata UI:

- Requirements

- Unity Catalog

- Metadata Ingestion

- Query Usage

- Data Profiler

- Data Quality

- Lineage

- dbt Integration

- Troubleshooting

Ingestion Deployment

To run the Ingestion via the UI you'll need to use the OpenMetadata Ingestion Container, which comes shipped with custom Airflow plugins to handle the workflow deployment. If you want to install it manually in an already existing Airflow host, you can follow this guide.

If you don't want to use the OpenMetadata Ingestion container to configure the workflows via the UI, then you can check the following docs to run the Ingestion Framework in any orchestrator externally.

- Run Connectors from the OpenMetadata UI: Learn how to manage your deployment to run connectors from the UI

- Run the Connector Externally: Get the YAML to run the ingestion externally

- External Schedulers: Get more information about running the Ingestion Framework Externally

How to Run the Connector Externally

To run the Ingestion via the UI you'll need to use the OpenMetadata Ingestion Container, which comes shipped with custom Airflow plugins to handle the workflow deployment.

If, instead, you want to manage your workflows externally on your preferred orchestrator, you can check the following docs to run the Ingestion Framework anywhere.

Requirements

Python Requirements

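We support Python versions 3.9 to 3.11.

To run the Databricks ingestion, you will need to install the Databricks extra of the ingestion package, typically with `pip3 install "openmetadata-ingestion[databricks]"`.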

Authentication Types

The Databricks connector supports three authentication methods:

- Personal Access Token (PAT): Generated Personal Access Token for Databricks workspace authentication.

- Databricks OAuth (Service Principal): OAuth2 Machine-to-Machine authentication using a Service Principal.

- Azure AD Setup: Specifically for Azure Databricks workspaces that use Azure Active Directory for identity management. Uses Azure Service Principal authentication through Azure AD.
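As a rough sketch of how the three methods differ in the credentials they need, the helper below reports which fields are still missing for a chosen method. The field names (token, clientId, clientSecret, azureClientId, azureClientSecret, azureTenantId) are the ones used elsewhere on this page; the method labels and the helper itself are hypothetical and not part of OpenMetadata.

```python
# Hypothetical helper: the method labels ("pat", "oauth", "azure_ad") are
# illustrative only; the field names are the ones used on this page.
REQUIRED_FIELDS = {
    "pat": ("token",),
    "oauth": ("clientId", "clientSecret"),
    "azure_ad": ("azureClientId", "azureClientSecret", "azureTenantId"),
}


def missing_credentials(method: str, credentials: dict) -> list:
    """Return the required fields that are absent or empty for `method`."""
    if method not in REQUIRED_FIELDS:
        raise ValueError(f"Unknown authentication method: {method!r}")
    return [name for name in REQUIRED_FIELDS[method] if not credentials.get(name)]
```

For example, `missing_credentials("oauth", {"clientId": "abc"})` reports that only clientSecret still needs to be supplied.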

Permission Requirements

The required permissions vary based on the authentication method used:

Personal Access Token Permissions

When using PAT, the token inherits the permissions of the user who created it. Ensure the user has:

Service Principal Permissions (OAuth/Azure AD)

For Service Principal authentication, grant permissions to the Service Principal:

Adjust <user>, <catalog_name>, <schema_name>, and <table_name> according to your specific deployment and security requirements.
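A minimal sketch of how read-only GRANT statements could be rendered for a given principal. The privilege names used here (USE CATALOG, USE SCHEMA, SELECT) are assumptions based on standard Unity Catalog privileges, not a confirmed list of what the connector requires, and the function name is hypothetical. Review the output against your own security requirements before running it.

```python
def grant_statements(principal: str, catalog: str, schema: str, table: str) -> list:
    """Render read-only GRANT statements for one table.

    The privileges below are assumed for illustration, not taken from
    the connector's documentation.
    """

    def quote(identifier: str) -> str:
        # Backtick-quote an identifier, doubling any embedded backticks.
        return "`" + identifier.replace("`", "``") + "`"

    cat, sch, tbl, who = quote(catalog), quote(schema), quote(table), quote(principal)
    return [
        f"GRANT USE CATALOG ON CATALOG {cat} TO {who};",
        f"GRANT USE SCHEMA ON SCHEMA {cat}.{sch} TO {who};",
        f"GRANT SELECT ON TABLE {cat}.{sch}.{tbl} TO {who};",
    ]
```

For a Service Principal, the principal would normally be its Application ID rather than a user email.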

Getting Connection Details

Here are the steps to get hostPort, httpPath and authentication credentials:

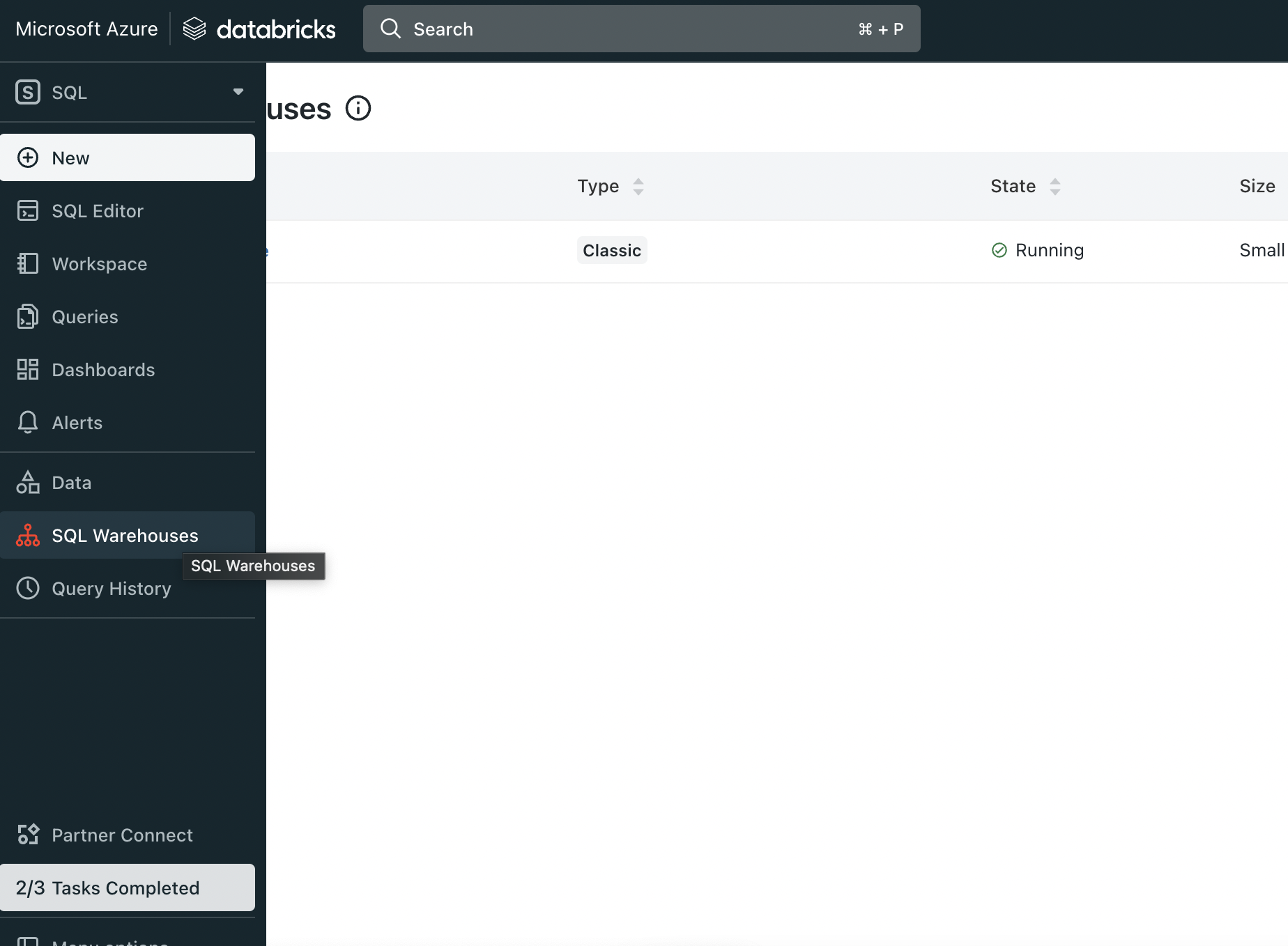

First login to Azure Databricks and from side bar select SQL Warehouse (In SQL section)

Select Sql Warehouse

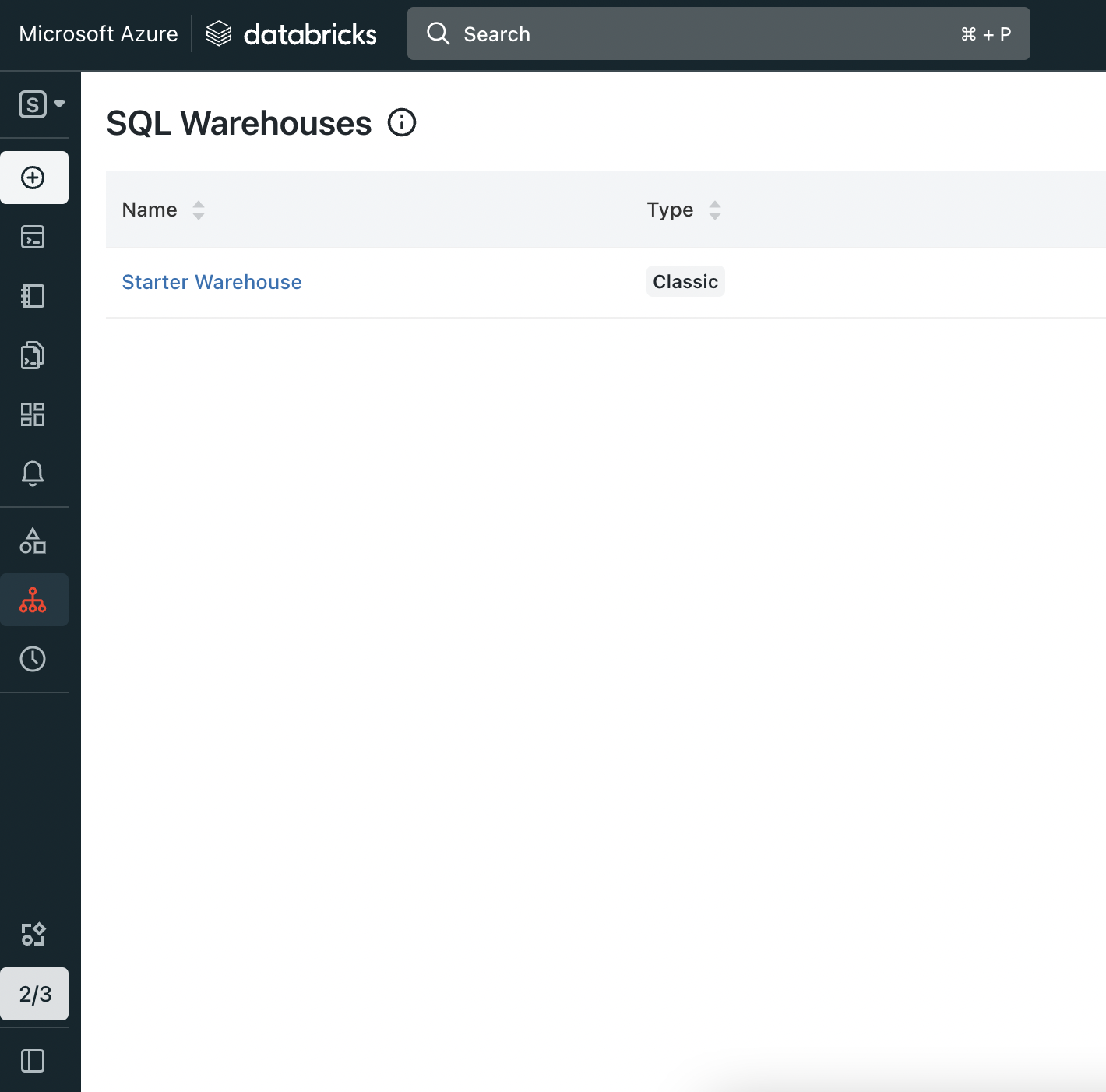

Now click on sql Warehouse from the SQL Warehouses list.

Open Sql Warehouse

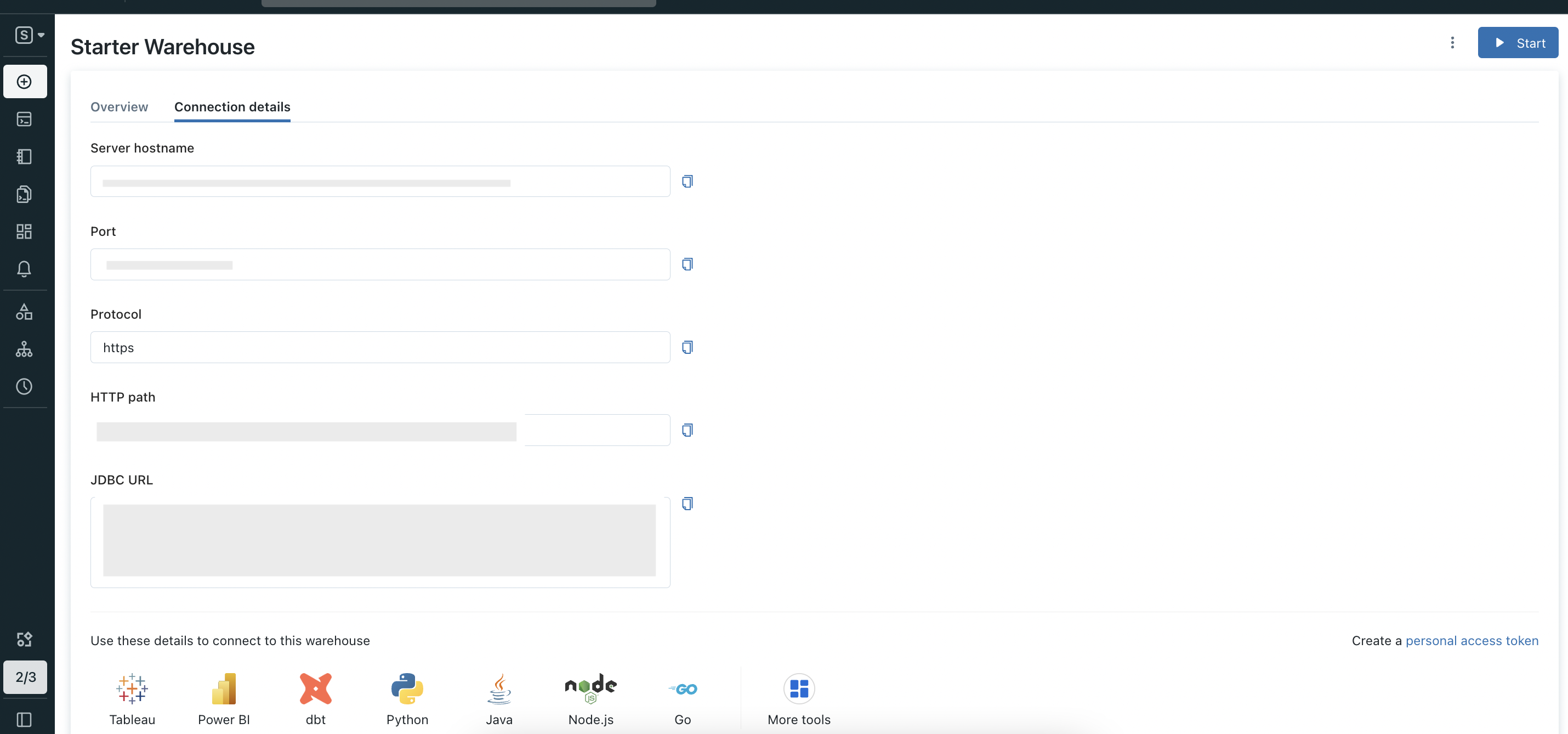

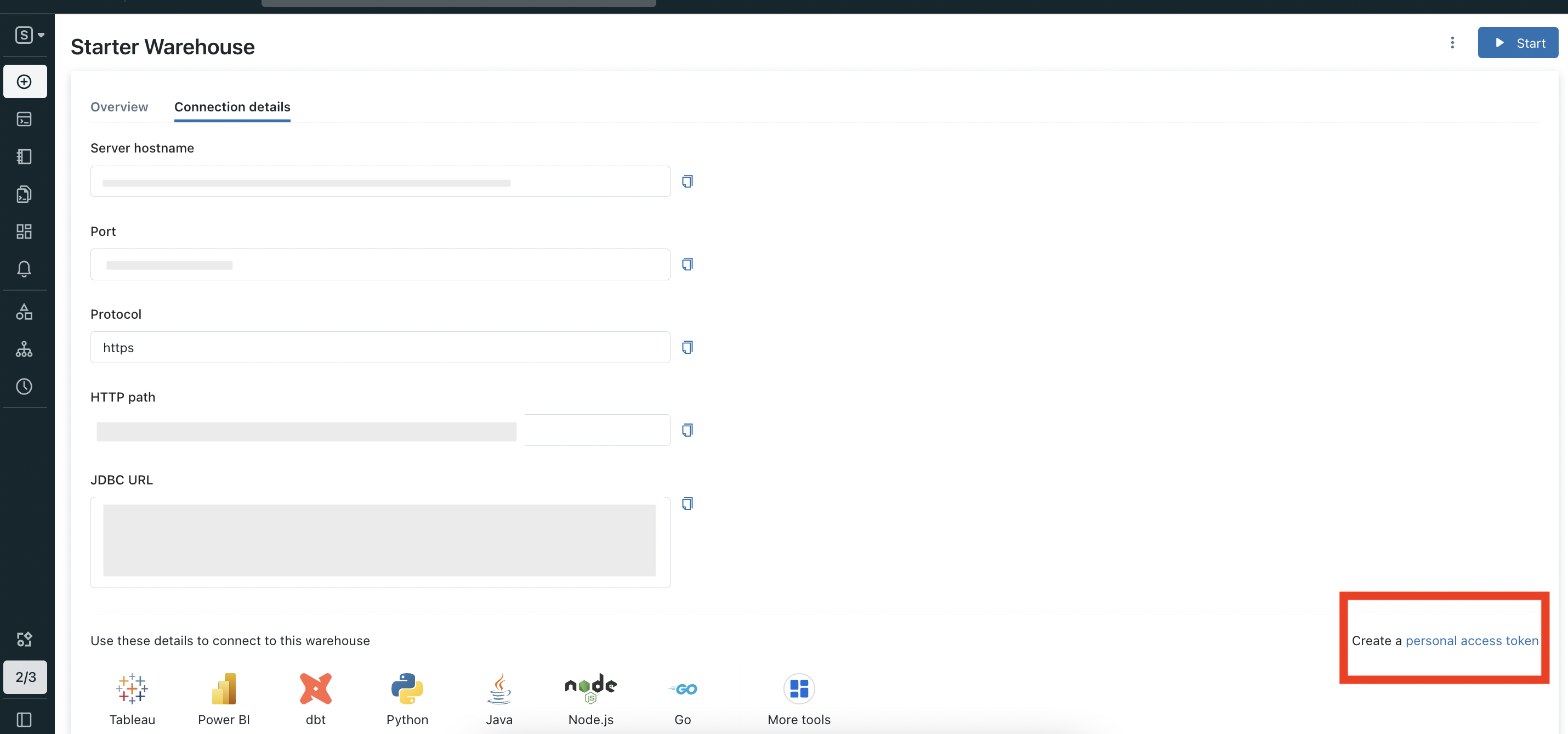

On that page, go to the Connection details section. Server hostname and Port together form your hostPort, and HTTP path is your httpPath.

Connection details
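The sketch below shows one way to combine the two values into a single hostPort string. It assumes the `hostname:port` form and a default port of 443; the function name is hypothetical.

```python
def build_host_port(server_hostname: str, port: int = 443) -> str:
    """Join the Server hostname and Port values into one hostPort string."""
    hostname = server_hostname.strip()
    # Drop a scheme if the value was pasted from a browser address bar.
    for prefix in ("https://", "http://"):
        if hostname.startswith(prefix):
            hostname = hostname[len(prefix):]
    hostname = hostname.rstrip("/")
    if not hostname:
        raise ValueError("Server hostname must not be empty")
    return f"{hostname}:{port}"
```

The HTTP path value can be copied as-is into httpPath.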

In the Connection details section, click Create a personal access token.

Open create token

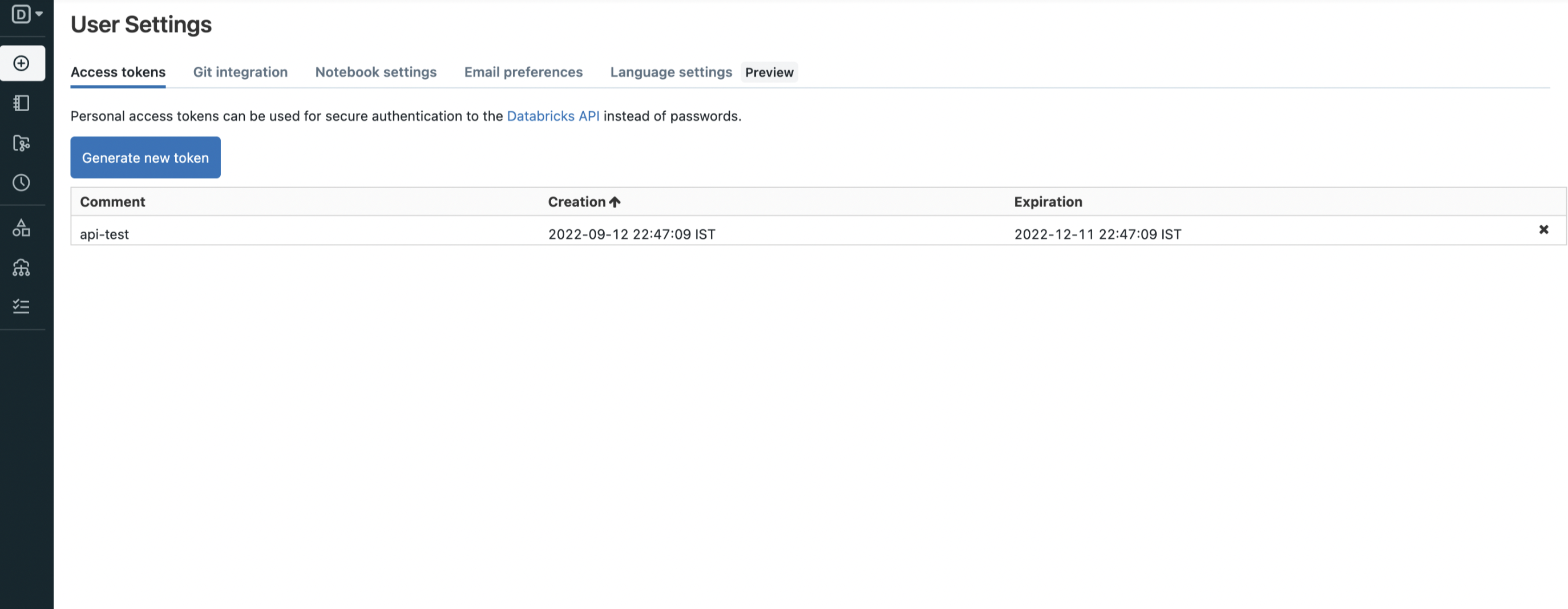

On this page you can create a new token.

Generate token

Getting Service Principal Credentials

For Databricks OAuth (All Platforms)

- Navigate to your Databricks Account Console

- Go to Settings → Identity and access → Service Principals → Add Service Principal

- Note down the Application ID (this is your clientId)

- Click Generate Secret and save the secret (this is your clientSecret)

For Azure AD Setup (Azure Databricks Only)

- Go to Azure Portal → Azure Active Directory

- Navigate to Microsoft Entra ID → App registrations → New registration

- After registration, note:

  - Application (client) ID (this is your azureClientId)

  - Directory (tenant) ID (this is your azureTenantId)

- Go to Certificates & secrets → New client secret

- Create and save the secret value (this is your azureClientSecret)

- Navigate to your Azure Databricks Account Console

- Go to Settings → Identity and access → Service Principals → Add Service Principal

- Select Microsoft Entra ID managed option and enter your azureClientId

Common Issues

Authentication Failures

- PAT Issues: Ensure the token hasn't expired (maximum lifetime of 90 days)

- Service Principal: Verify the Service Principal has necessary permissions

- Azure AD: Check if Azure Databricks workspace is configured for Azure AD authentication
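To make the PAT expiry check concrete, here is a small sketch that computes how many days a token has left. It assumes you know the creation date and uses the 90-day lifetime mentioned above as a default; the function name is hypothetical.

```python
from datetime import date, timedelta


def days_until_expiry(created_on: date, today: date, lifetime_days: int = 90) -> int:
    """Days left before a token created on `created_on` expires.

    A negative result means the token has already expired.
    """
    expires_on = created_on + timedelta(days=lifetime_days)
    return (expires_on - today).days
```

A token created on 2024-01-01 and checked on 2024-03-01 has 30 days left under these assumptions.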

Permission Errors

- Ensure proper GRANT statements have been executed for your authentication method

Unity Catalog

If you are using Unity Catalog in Databricks, check out the Unity Catalog connector.

Metadata Ingestion

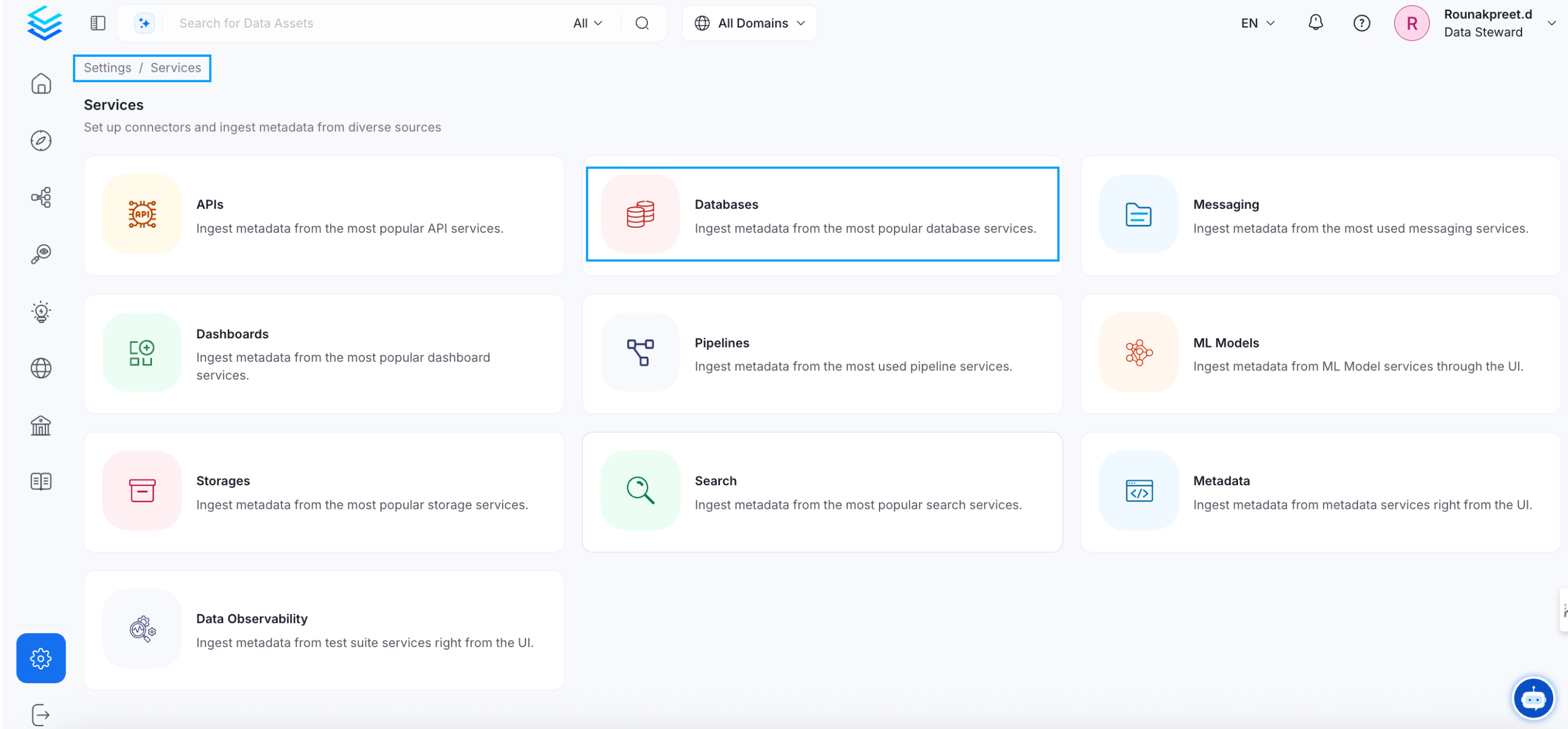

1. Visit the Services Page

Click Settings in the side navigation bar and then Services.

The first step is to ingest the metadata from your sources. To do that, you first need to create a Service connection.

This Service will be the bridge between OpenMetadata and your source system.

Once a Service is created, it can be used to configure your ingestion workflows.

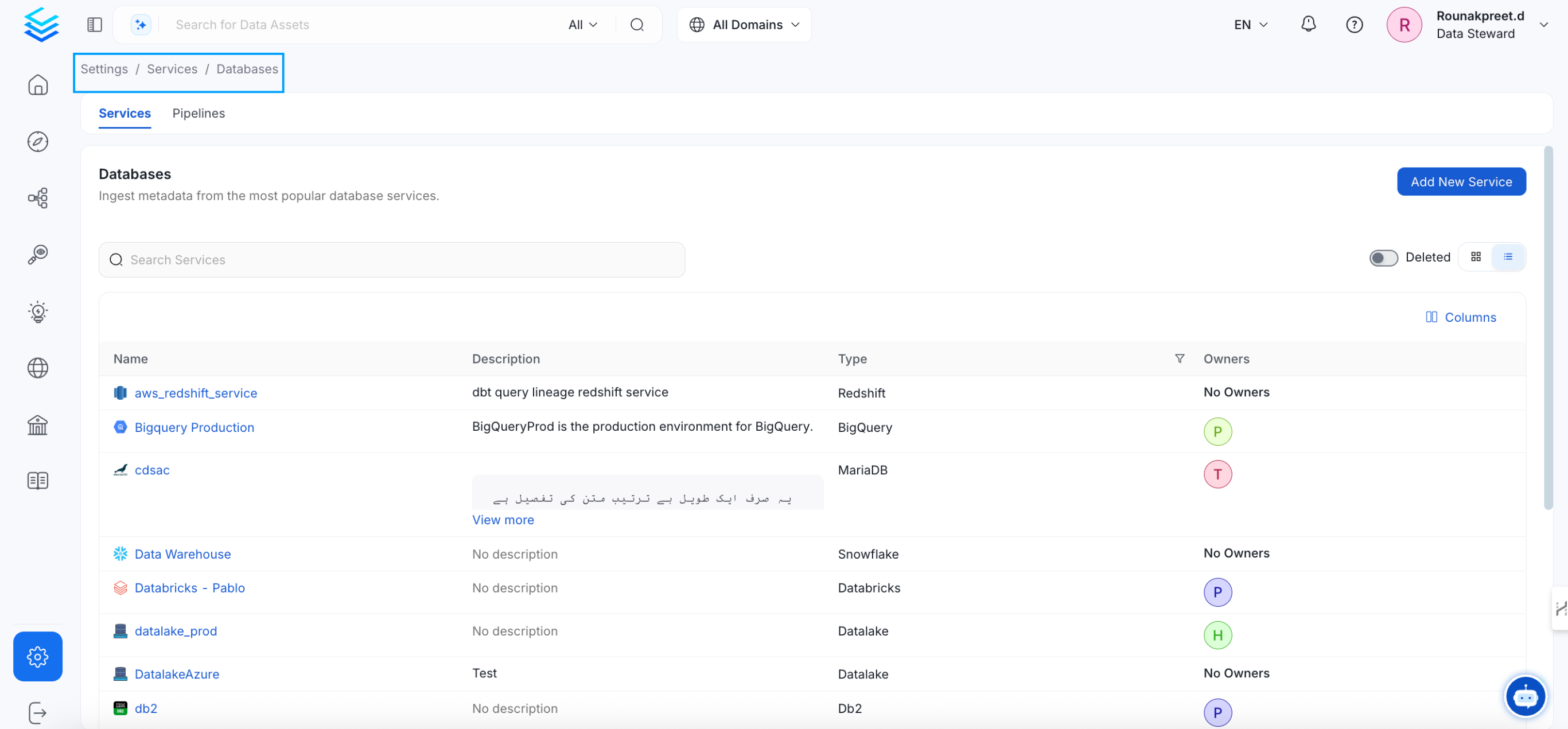

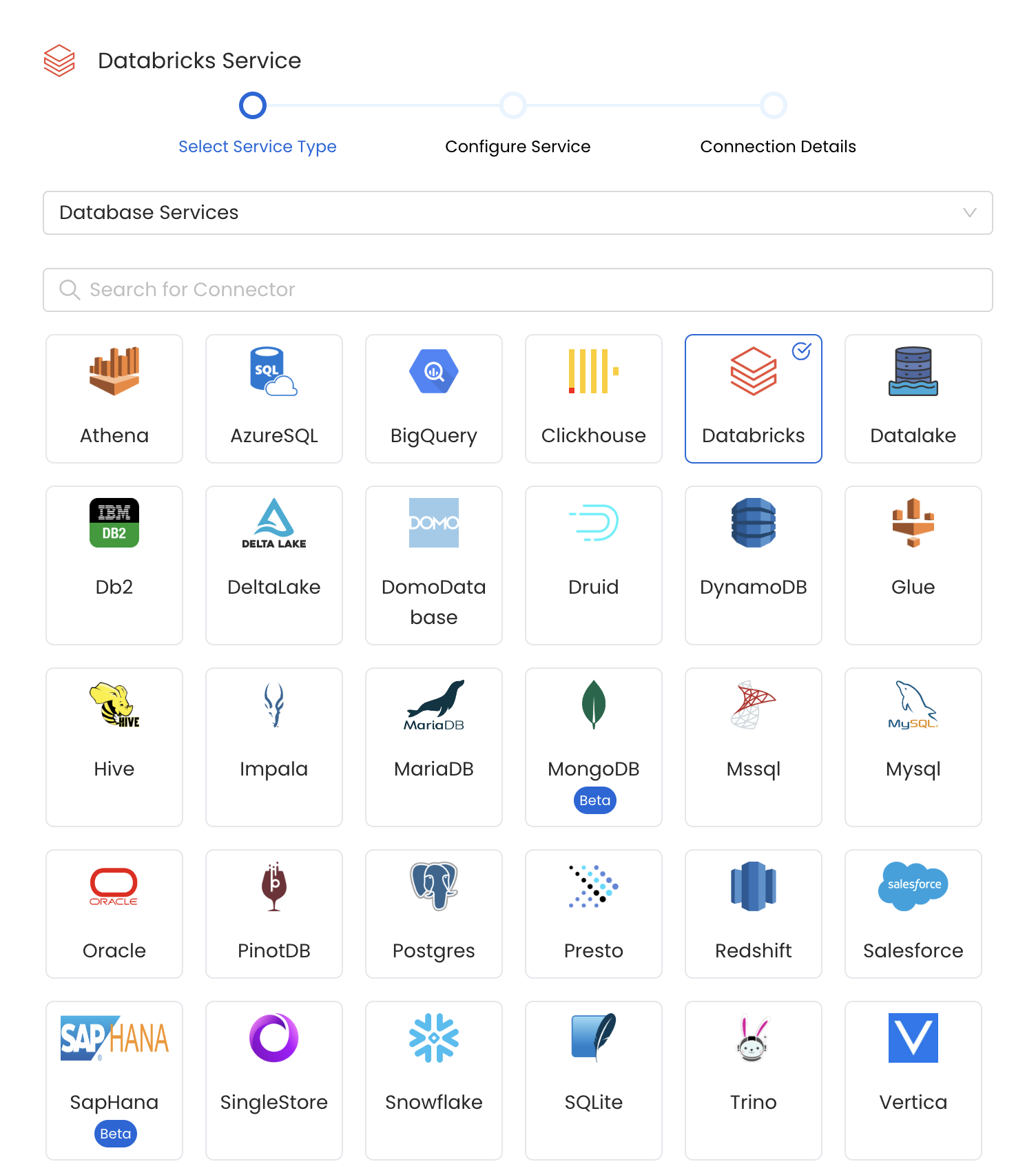

Select your Service Type and Add a New Service

Add a new Service from the Services page

Select your Service from the list

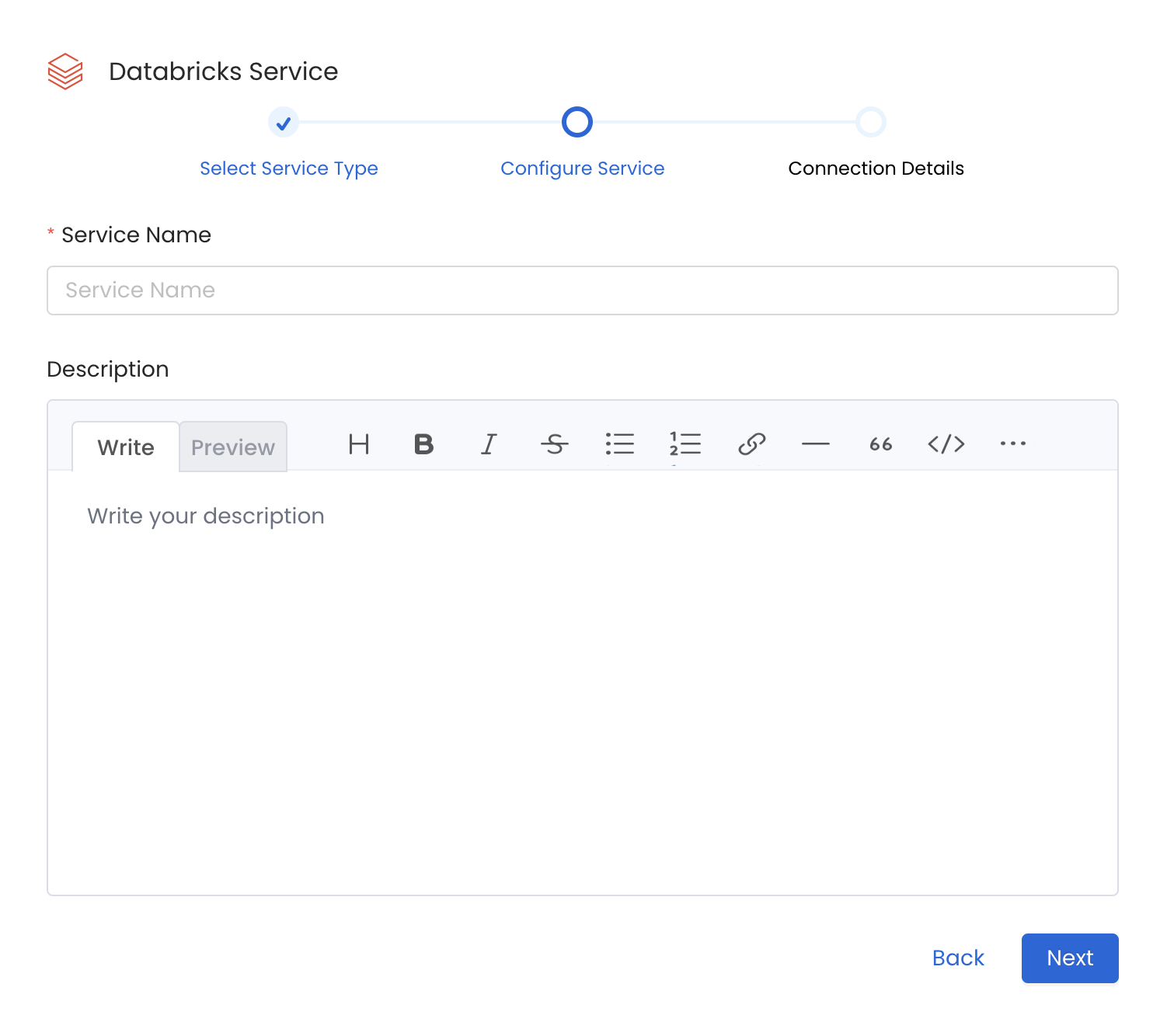

4. Name and Describe your Service

Provide a name and description for your Service.

Service Name

OpenMetadata uniquely identifies Services by their Service Name. Provide a name that distinguishes your deployment from other Services, including the other Databricks Services that you might be ingesting metadata from.

Note that once the name is set, it cannot be changed.

Provide a Name and description for your Service

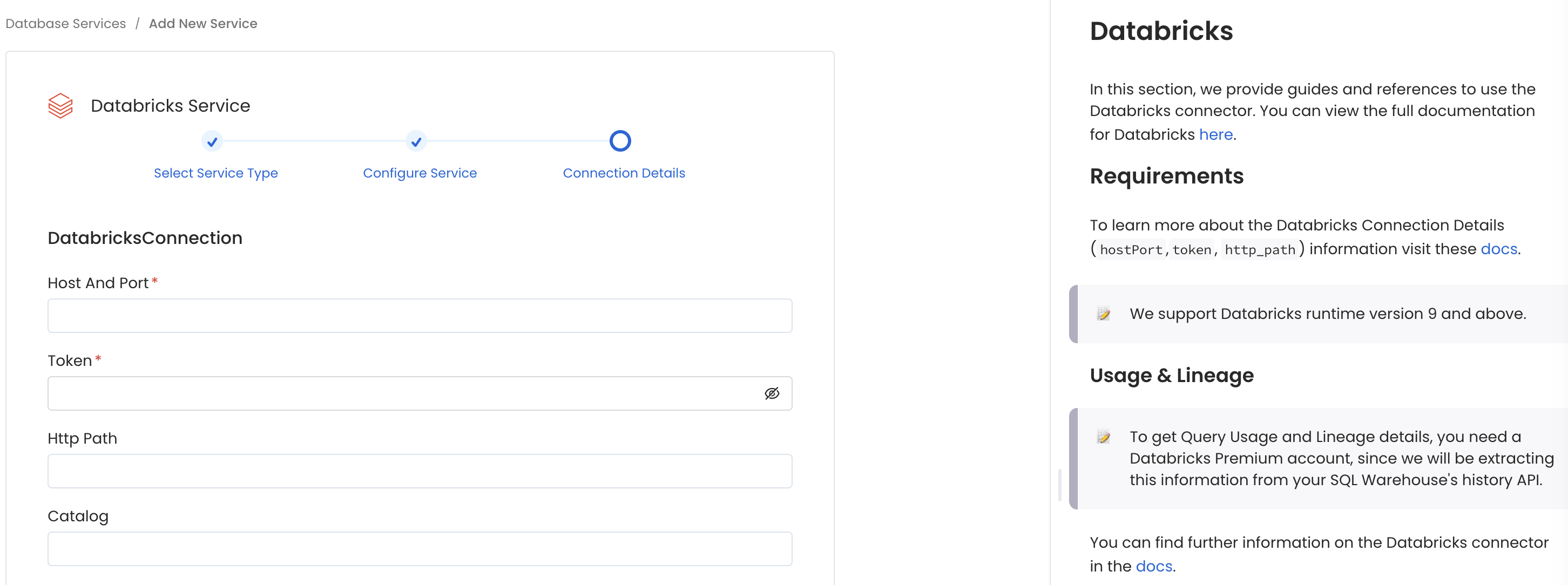

5. Configure the Service Connection

In this step, we will configure the connection settings required for Databricks.

Please follow the instructions below to properly configure the Service to read from your sources. You will also find helper documentation on the right-hand side panel in the UI.

Configure the Service connection by filling the form

Connection Details

Host and Port: Enter the fully qualified hostname and port number for your Databricks deployment in the Host and Port field.

Authentication Type: Choose one of the following authentication methods:

- Personal Access Token: Enter your PAT in the token field

- Databricks OAuth: Provide clientId and clientSecret for the Service Principal

- Azure AD Setup: Provide azureClientId, azureClientSecret, and azureTenantId

HTTP Path: Databricks compute resources URL.

connectionTimeout: The maximum amount of time (in seconds) to wait for a successful connection to the data source. If the connection attempt takes longer than this timeout period, an error will be returned.

Catalog: Catalog of the data source (for example, hive_metastore). This is an optional parameter that restricts metadata reading to a single catalog. When left blank, OpenMetadata Ingestion attempts to scan all catalogs.

DatabaseSchema: databaseSchema of the data source. This is an optional parameter that restricts metadata reading to a single databaseSchema. When left blank, OpenMetadata Ingestion attempts to scan all databaseSchemas.
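The fields above can be pictured as a single configuration mapping. The sketch below assembles one in Python. The key names follow the field names on this page, but the exact layout expected by your OpenMetadata version (in particular how the authentication fields are nested) is an assumption, and the function is hypothetical.

```python
from typing import Optional


def databricks_connection(
    host_port: str,
    http_path: str,
    auth: dict,
    catalog: Optional[str] = None,
    database_schema: Optional[str] = None,
    connection_timeout: Optional[int] = None,
) -> dict:
    """Assemble the connection fields into one dict (layout is assumed)."""
    config = {"hostPort": host_port, "httpPath": http_path}
    # Credentials for the chosen method, e.g. {"token": "..."} for a PAT.
    config.update(auth)
    # Optional fields are only set when a restriction or override is wanted.
    if catalog:
        config["catalog"] = catalog
    if database_schema:
        config["databaseSchema"] = database_schema
    if connection_timeout is not None:
        config["connectionTimeout"] = connection_timeout
    return config
```

Leaving catalog and database_schema unset mirrors leaving the form fields blank, so every catalog and schema is scanned.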

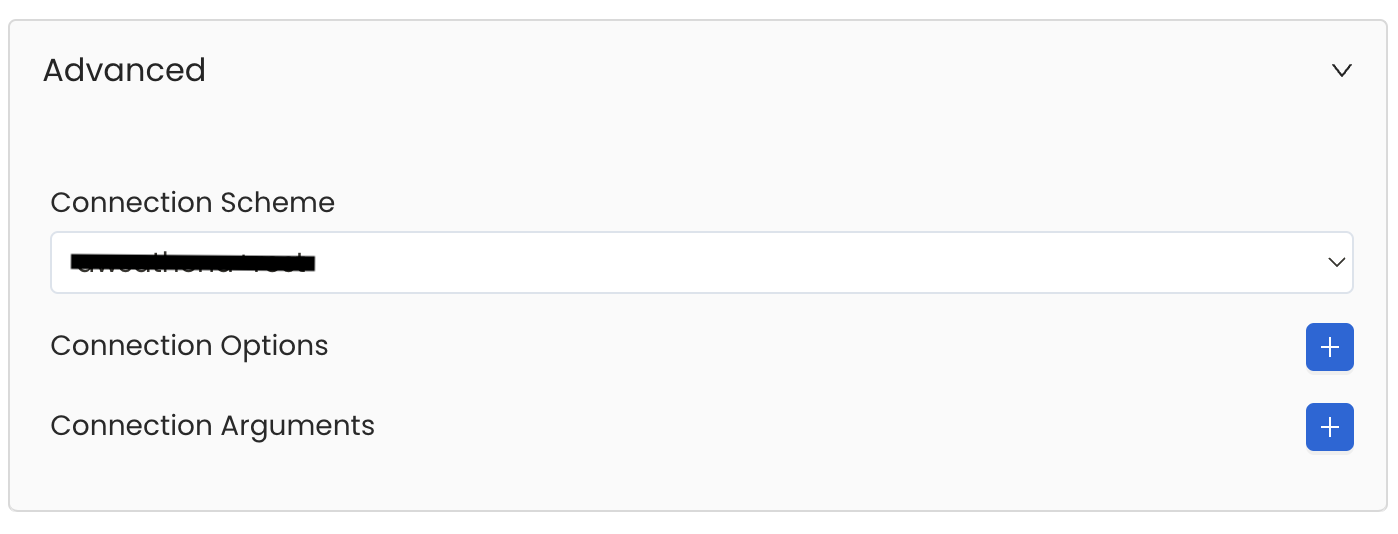

Advanced Configuration

Database Services have an Advanced Configuration section, where you can pass extra arguments to the connector and, if needed, change the connection Scheme.

This would only be required to handle advanced connectivity scenarios or customizations.

- Connection Options (Optional): Enter the details for any additional connection options that can be sent to database during the connection. These details must be added as Key-Value pairs.

- Connection Arguments (Optional): Enter the details for any additional connection arguments such as security or protocol configs that can be sent during the connection. These details must be added as Key-Value pairs.

Advanced Configuration

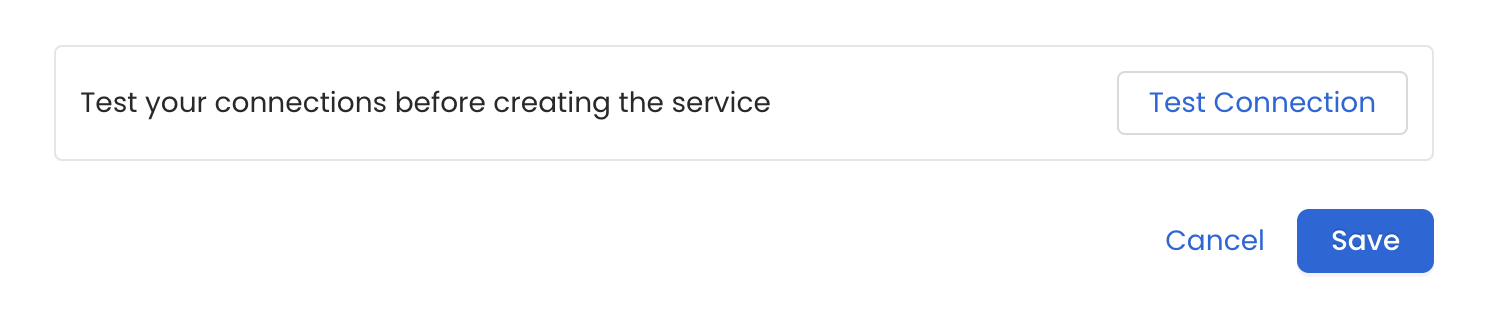

6. Test the Connection

Once the credentials have been added, click on Test Connection and Save the changes.

Test the connection and save the Service

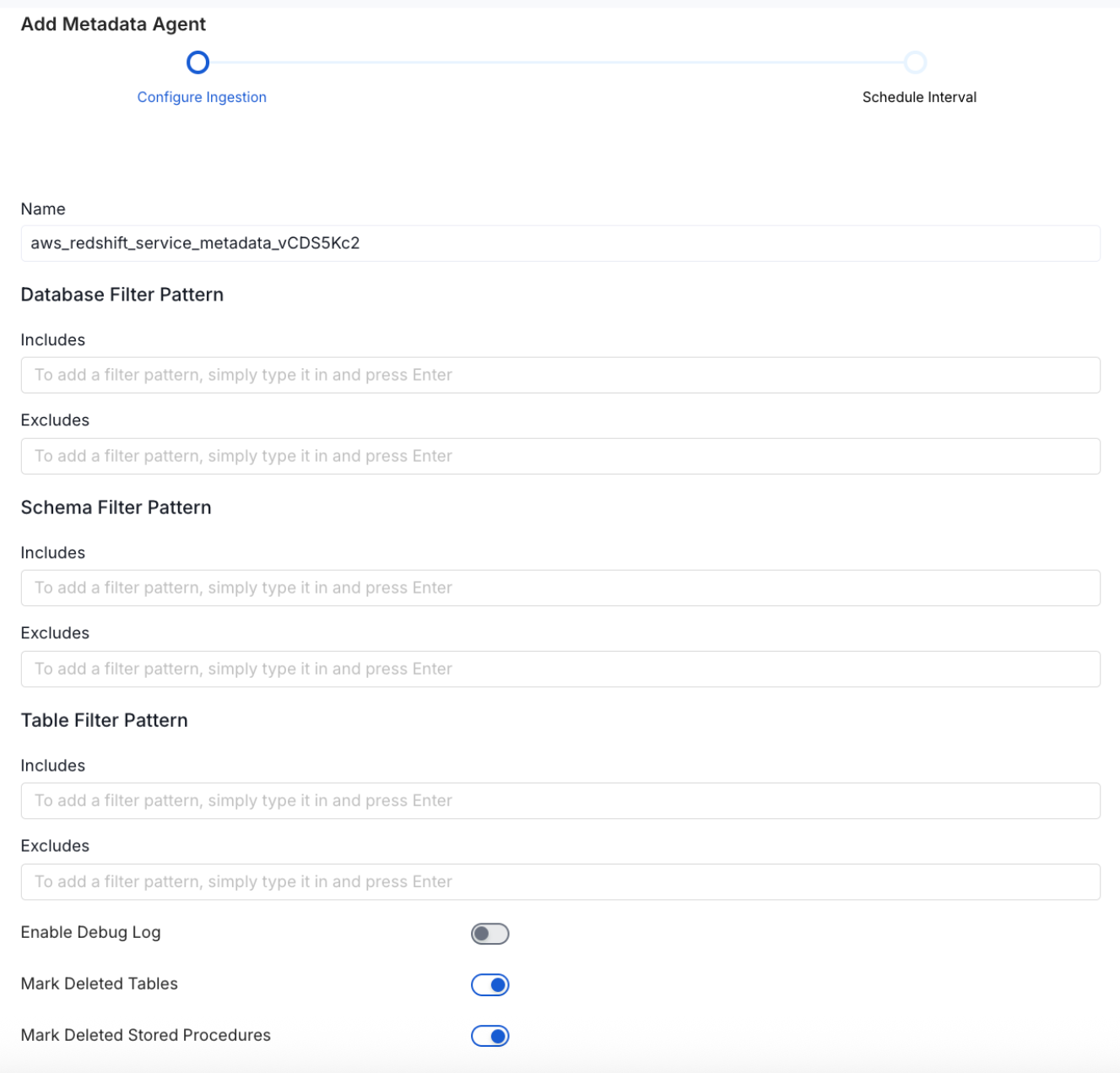

7. Configure Metadata Ingestion

In this step, we will configure the metadata ingestion pipeline. Please follow the instructions below.

Configure Metadata Ingestion Page - 1

Configure Metadata Ingestion Page - 2

Metadata Ingestion Options

If the owner's name is openmetadata, you need to enter openmetadata@domain.com in the name section of the add team/user form. Click here for more info.

Name: This field refers to the name of ingestion pipeline, you can customize the name or use the generated name.

Database Filter Pattern (Optional): Use database filter patterns to control whether to include databases as part of metadata ingestion.

- Include: Explicitly include databases by adding a list of comma-separated regular expressions to the Include field. OpenMetadata will include all databases with names matching one or more of the supplied regular expressions. All other databases will be excluded.

- Exclude: Explicitly exclude databases by adding a list of comma-separated regular expressions to the Exclude field. OpenMetadata will exclude all databases with names matching one or more of the supplied regular expressions. All other databases will be included.

Schema Filter Pattern (Optional): Use schema filter patterns to control whether to include schemas as part of metadata ingestion.

- Include: Explicitly include schemas by adding a list of comma-separated regular expressions to the Include field. OpenMetadata will include all schemas with names matching one or more of the supplied regular expressions. All other schemas will be excluded.

- Exclude: Explicitly exclude schemas by adding a list of comma-separated regular expressions to the Exclude field. OpenMetadata will exclude all schemas with names matching one or more of the supplied regular expressions. All other schemas will be included.

Table Filter Pattern (Optional): Use table filter patterns to control whether to include tables as part of metadata ingestion.

- Include: Explicitly include tables by adding a list of comma-separated regular expressions to the Include field. OpenMetadata will include all tables with names matching one or more of the supplied regular expressions. All other tables will be excluded.

- Exclude: Explicitly exclude tables by adding a list of comma-separated regular expressions to the Exclude field. OpenMetadata will exclude all tables with names matching one or more of the supplied regular expressions. All other tables will be included.
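The include and exclude semantics described above can be sketched in a few lines of Python. This is an illustration, not the connector's implementation: matching with `re.match` (anchored at the start of the name) and checking includes before excludes when both are set are assumptions.

```python
import re
from typing import Optional, Sequence


def is_included(
    name: str,
    includes: Optional[Sequence[str]] = None,
    excludes: Optional[Sequence[str]] = None,
) -> bool:
    """Decide whether `name` passes the include and exclude regex lists."""
    # With an include list, the name must match at least one pattern.
    if includes and not any(re.match(pattern, name) for pattern in includes):
        return False
    # With an exclude list, any matching pattern filters the name out.
    if excludes and any(re.match(pattern, name) for pattern in excludes):
        return False
    return True


tables = ["sales_orders", "sales_tmp", "hr_staff"]
kept = [t for t in tables if is_included(t, includes=["^sales_.*"], excludes=[".*_tmp$"])]
```

Here `kept` contains only sales_orders: hr_staff does not match the include pattern, and sales_tmp matches the exclude pattern.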

Enable Debug Log (toggle): Set the Enable Debug Log toggle to set the default log level to debug.

Mark Deleted Tables (toggle): Set the Mark Deleted Tables toggle to flag tables as soft-deleted if they are not present anymore in the source system.

Mark Deleted Tables from Filter Only (toggle): Set the Mark Deleted Tables from Filter Only toggle to flag tables as soft-deleted if they are no longer present within the filtered schema or database only. This flag is useful when you have more than one ingestion pipeline. For example if you have a schema

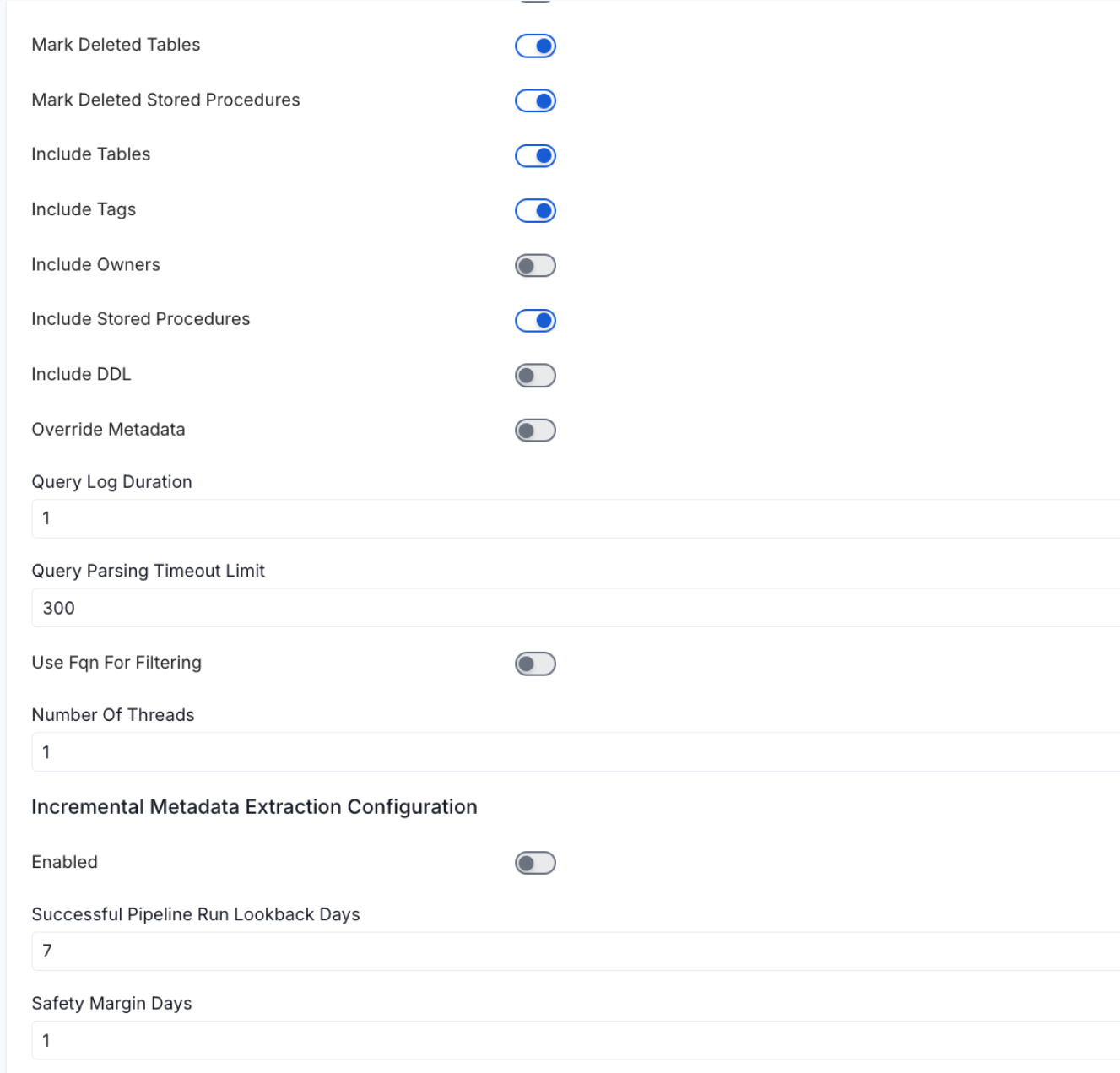

includeTables (toggle): Optional configuration to turn off fetching metadata for tables.

includeViews (toggle): Set the Include views toggle to control whether to include views as part of metadata ingestion.

includeTags (toggle): Set the 'Include Tags' toggle to control whether to include tags as part of metadata ingestion.

includeOwners (toggle): Set the 'Include Owners' toggle to control whether owners are added to the ingested entity as part of metadata ingestion. An owner is added only if the owner email matches a user stored in the OpenMetadata server. If the ingested entity already exists and has an owner, the owner will not be overwritten.

includeStoredProcedures (toggle): Optional configuration to toggle the Stored Procedures ingestion.

includeDDL (toggle): Optional configuration to toggle the DDL Statements ingestion.

queryLogDuration (Optional): Configuration to tune how far we want to look back in query logs to process Stored Procedures results.

queryParsingTimeoutLimit (Optional): Configuration to set the timeout for parsing the query in seconds.

useFqnForFiltering (toggle): Regex will be applied on the fully qualified name (e.g. service_name.db_name.schema_name.table_name) instead of the raw name (e.g. table_name).
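A short sketch of the difference this toggle makes: the same pattern is tested against either the raw table name or the dot-joined fully qualified name. The function and the sample names are hypothetical, and matching with `re.match` is an assumption.

```python
import re


def matches(pattern: str, service: str, database: str, schema: str,
            table: str, use_fqn: bool = False) -> bool:
    """Apply `pattern` to the raw table name or to the fully qualified name."""
    target = ".".join([service, database, schema, table]) if use_fqn else table
    return re.match(pattern, target) is not None


parts = ("dbx_prod", "hive_metastore", "sales", "orders")
raw_hit = matches("^orders$", *parts)
fqn_miss = matches("^orders$", *parts, use_fqn=True)
fqn_hit = matches(r"^dbx_prod\.hive_metastore\.sales\..*", *parts, use_fqn=True)
```

A pattern written for raw names, such as `^orders$`, stops matching once the toggle is enabled, so patterns usually need to be rewritten against the full name.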

Incremental (Beta): Use Incremental Metadata Extraction after the first execution. This is done by fetching only the changed tables instead of all of them. Only available for BigQuery, Redshift, and Snowflake.

- Enabled: If True, enables Metadata Extraction to be Incremental.

- lookback Days: Number of days to search back for a successful pipeline run. The timestamp of the last found successful pipeline run will be used as a base to search for updated entities.

- Safety Margin Days: Number of days to add to the last successful pipeline run timestamp to search for updated entities.

Threads (Beta): Use a multithreaded approach for Metadata Extraction. Here you can define the number of threads you would like to run concurrently. For further information, please check the documentation on Metadata Ingestion - Multithreading.

Note that the right-hand side panel in the OpenMetadata UI will also share useful documentation when configuring the ingestion.

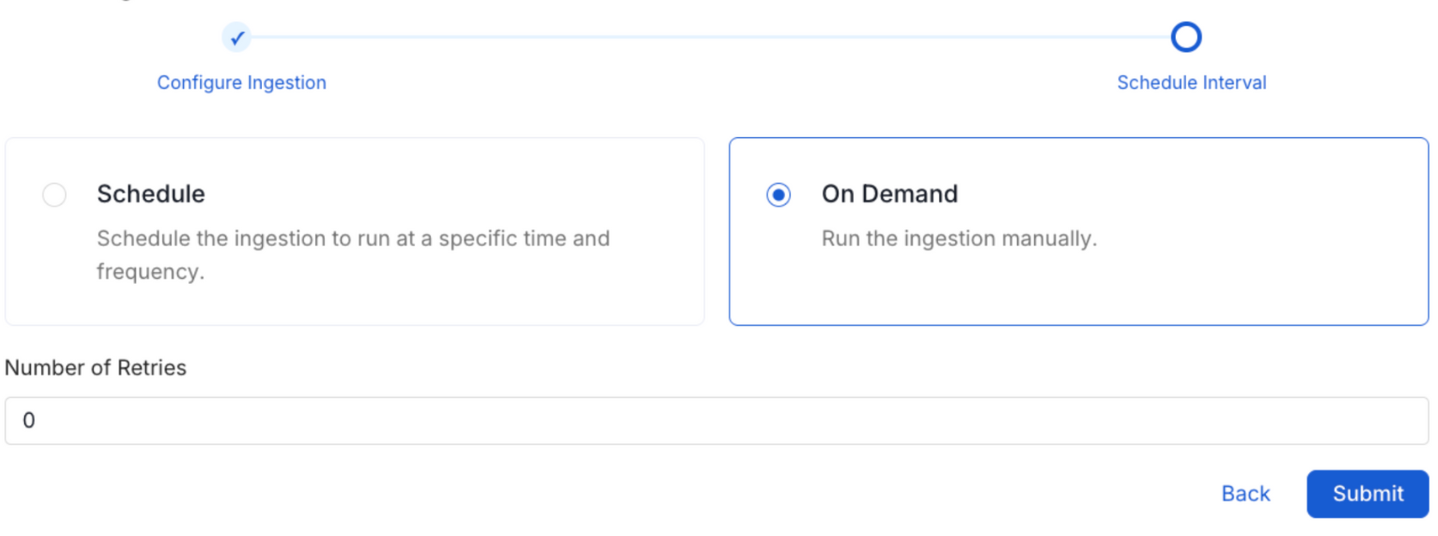

8. Schedule the Ingestion and Deploy

Scheduling can be set up at an hourly, daily, weekly, or manual cadence. The timezone is in UTC. Select a Start Date to schedule for ingestion. It is optional to add an End Date.
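For readers who think of schedules as cron expressions, the cadences above correspond roughly to the standard five-field cron strings below, evaluated in UTC. The specific minute, hour, and weekday chosen here are illustrative assumptions, and the mapping is not taken from the OpenMetadata UI.

```python
from typing import Optional

# Illustrative cron equivalents (UTC). The exact minute, hour, and weekday
# are arbitrary choices for this sketch.
CRON_BY_CADENCE = {
    "hourly": "0 * * * *",   # at minute 0 of every hour
    "daily": "0 0 * * *",    # every day at 00:00
    "weekly": "0 0 * * 0",   # every Sunday at 00:00
    "manual": None,          # no schedule; runs are triggered by hand
}


def cron_for(cadence: str) -> Optional[str]:
    """Return the cron expression for a cadence, or None for manual runs."""
    try:
        return CRON_BY_CADENCE[cadence.lower()]
    except KeyError:
        raise ValueError(f"Unsupported cadence: {cadence!r}") from None
```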

Review your configuration settings. If they match what you intended, click Deploy to create the service and schedule metadata ingestion.

If something doesn't look right, click the Back button to return to the appropriate step and change the settings as needed.

After configuring the workflow, you can click on Deploy to create the pipeline.

Schedule the Ingestion Pipeline and Deploy